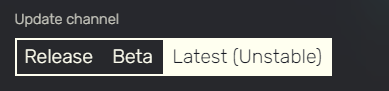

For that reason (to avoid inadvertently breaking things by using it), it’s ‘hidden’ (i.e. made more difficult to access) in production mode.

‘Global bans’ are a bad solution in the first place, and they create a lot of extra work for us: adding detections, mitigating false positives, and dealing with the trenches of the abuse ecosystem. They also carry a heavy human cost, since we end up dealing with people ‘being punished’ for reasons that are not directly or entirely ‘their fault’, but rather the fault of the broader abuse ecosystem and other factors beyond their control.

In fact, many of these abusive anti-cheat systems are themselves almost covert advertisements for cheats, reinforcing the cheating ecosystem and giving it more incentive, not less. And that’s not even getting into the anti-cheat systems that have close, direct ties to cheat vendors, or those that behave like cheats themselves by exploiting vulnerabilities in platform code for profit and doing everything in their power to prevent us from fixing them.

The start of a proper fix to all of this would be to break the cycle of abuse → anti-abuse quick fixes → fear → more fear by promoting proper design and constructive, freely available ways of ‘having fun’, rather than an ecosystem that feeds off fear and is driven by perverse incentives to keep abuse alive.

There should have been, in fact, and once the ecosystem allows it, there will be. However, the ecosystem is aligned in such a way that this is unlikely to happen any time soon, as there will always be some insecure legacy code running somewhere.

… and why would this be? If someone wants to inspect what you’re doing on their machine, why not let them? If your UI has a bug, why not let them investigate it, file a properly informed report, and perhaps find a workaround for themselves?

Why should you punish someone for behavior that has no direct correlation with abuse when, as you said, no abuse is possible through it on your server? You’re basically punishing people for… being curious?!

Instead of imagining possible ‘unfair advantages’ and then devising preemptive mitigations from that viewpoint when no such ‘abuse’ has even been demonstrated yet, why not… leave things as they are, and deal with abuse if and when it actually happens?

For example, removing a blanked-out screen isn’t something with lasting effects, so it’s not even worth trying to mitigate beforehand. If someone does manage it in your example, have a laugh, admit ‘heh, didn’t think of that’ or ‘hah, yeah, knew that wouldn’t last’, try to understand what happened, and think of a constructive fix: adapting your gameplay design to fit the scenario users expect, having a stern talk with people who somehow end up seeing through your blanked-out view, using the game’s screen fade-out commands, etc. That beats taking preemptive measures whose cost far outweighs the potential damage here, if only because they ‘normalize’ the ‘yeah, just to be sure, got to block dev tools somehow’ style of behavior.

(Similarly, by the way: we already tend to mitigate the ways people ‘detect dev tools’, so if you write code to “kick players when detected”, you may find that all players get kicked after a platform update as a result of this mitigation, since, as said, this is a non-fix. At most, log such detections so you can correlate them against future markers of abuse; don’t try to act and punish instantly.)
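To make the ‘log, don’t punish’ idea a bit more concrete, here’s a minimal sketch assuming a TypeScript server script. `SignalLog`, `recordSignal`, `playersWithCorroboratedSignals` and the signal kinds are hypothetical names made up for illustration, not any platform’s API. A heuristic such as a dev-tools detection is only recorded with a timestamp; nothing kicks or bans on it, and it only becomes interesting when the same player later shows an independent, actual marker of abuse.

```typescript
// Hypothetical signal kinds; not tied to any real platform API.
type SignalKind = "devtools_heuristic" | "confirmed_abuse";

interface Signal {
  playerId: string;
  kind: SignalKind;
  timestampMs: number;
}

class SignalLog {
  private readonly signals: Signal[] = [];

  // Record a signal. Deliberately takes no action against the player.
  recordSignal(playerId: string, kind: SignalKind, timestampMs: number = Date.now()): void {
    this.signals.push({ playerId, kind, timestampMs });
  }

  // Players whose heuristic signal was followed by confirmed abuse within
  // `windowMs`. Meant as a starting point for human review, not a verdict.
  playersWithCorroboratedSignals(windowMs: number): string[] {
    const result = new Set<string>();
    for (const heuristic of this.signals) {
      if (heuristic.kind !== "devtools_heuristic") continue;
      const corroborated = this.signals.some(
        (s) =>
          s.playerId === heuristic.playerId &&
          s.kind === "confirmed_abuse" &&
          s.timestampMs >= heuristic.timestampMs &&
          s.timestampMs - heuristic.timestampMs <= windowMs,
      );
      if (corroborated) result.add(heuristic.playerId);
    }
    return Array.from(result);
  }
}

// Usage: "alice" only tripped the heuristic, so she is never flagged.
const log = new SignalLog();
log.recordSignal("alice", "devtools_heuristic", 1_000);
log.recordSignal("bob", "devtools_heuristic", 1_000);
log.recordSignal("bob", "confirmed_abuse", 5_000);
console.log(log.playersWithCorroboratedSignals(60_000)); // → ["bob"]
```

The point of the design is that a false-positive-prone heuristic (or one that a platform update silently breaks) never harms anyone on its own; it only adds context once real abuse has been observed.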